The Dark Side of Memes

A meme, for the uninitiated, is not just a cat joke on the internet.

A meme is an idea or behavior that spreads and evolves as people interact with it, often through the internet. Memes can make you laugh. Memes can make you scared. They can be a benign source of entertainment or engineered to promote false information. When the latter occurs online, it exploits human fallibilities and can spread disinformation like wildfire.

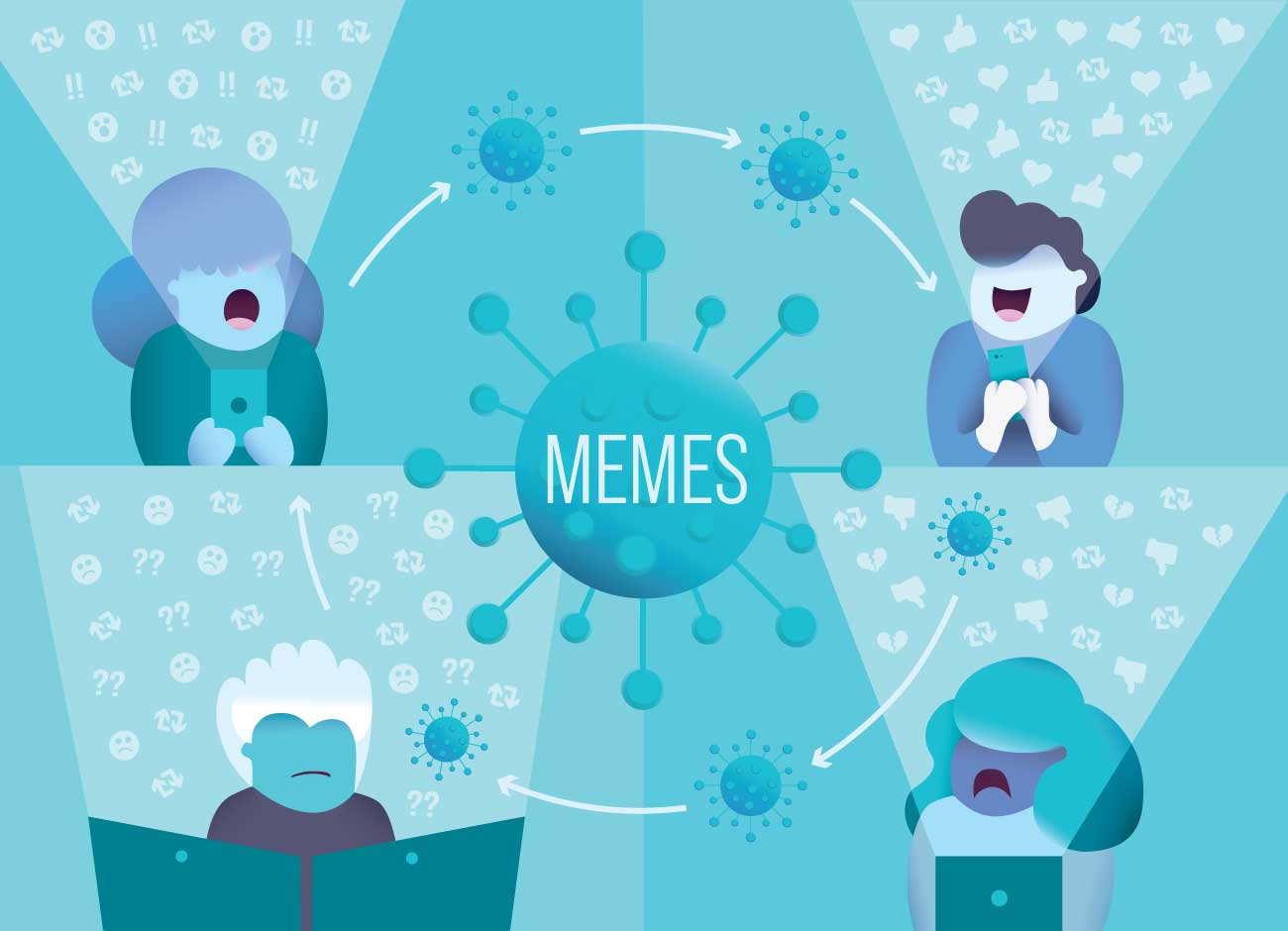

In September, Utah State University computer science professor Nick Flann gave a Science Unwrapped talk titled “Meme Menace: How Disinformation Spreads on Social Media,” comparing internet memes to biological viruses that infect a host’s mind and replicate via the share button.

“They spread and influence humans,” Flann said. “It’s not just fun, it can actually change your behavior and some of the outcomes of society, perhaps. Memes thrive in the world because we have the internet and because we have advanced artificial intelligence (AI).”

Viruses infiltrate a host’s cells to make its body work for them. The difference between biological viruses and internet memes is intent. Viruses don’t have an agenda beyond evolving to survive; internet memes, on the other hand, do. Memes can be jokes that emerge and evolve organically among social media users, or they can be architected using AI techniques to trick people into trusting the information and sharing it across their networks. Disinformation delivered via memes becomes more sinister when we start changing our behavior.

“It has tremendous power to influence the future,” Flann said.

He described a potential scenario where a bad actor targets likely supporters of a political candidate on social media with a meme containing false information about their polling station. The goal: reduce turnout and swing the election to another candidate.

“Imagine this is not just happening on election day, but every day,” Flann said.

Memes were not always powerful disruptors of democracy. The modern concept of the meme was introduced in 1976 by evolutionary biologist Richard Dawkins, who described memes (a shortening of “mimeme”) as a new type of replicator in human culture in his book The Selfish Gene.

“Just as genes propagate themselves in the gene pool by leaping from body to body via sperms or eggs, so memes propagate themselves in the meme pool by leaping from brain to brain,” Dawkins wrote.

Flann bought the book a year after it was published and still keeps it on his bookshelf.

“It was ahead of its time,” Flann says during a Zoom chat in February.

In the late ’70s, the internet as we know it did not exist. Although wireless communication and connected information networks had been theorized for decades, the early internet was clunky by today’s standards and node-to-node communication was limited. It would be 22 years before Google emerged as a simple search engine and nearly 30 years before a nascent Facebook was born in a Harvard dorm room.

“A lot of people in those days really saw the internet as having real great potential for good,” Flann says. “It gave voices to people who weren’t part of the establishment. And that’s a good thing.”

He recalls telling his wife that dictators wouldn’t be able to get away with disappearing people and hiding atrocities because reporters would be able to find evidence through photos shared online.

“So that was a good thing,” Flann says. “It’s still there, it’s just everyone is yelling I suppose.”

A new ecosystem for disinformation

In the early days of the internet, there were no rules governing user behavior. There still isn’t a codified set of international laws on information integrity. Eventually ads appeared on websites and search engines. People connected with others, and with ideas, they otherwise would never have encountered, for good and for bad. As the internet went mainstream, it also created a new ecosystem for disinformation to thrive.

Part of the reason is the replication rate, Flann explains.

Think of biological viruses like COVID-19 and its new variant strains. The more copies of the virus in the environment, the more successful it will likely be. The same can be seen online with the viral spread of disinformation.

“Social media has enabled such rapid spread, it’s based on the speed of infection how quickly this meme can spread from one person to another,” Flann says. “And then there is the branching factor—how many people it can infect. By reducing the time and increasing the number of people infected we really speed up that potential exponential growth.”
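Flann’s two levers, the time between shares and the branching factor, can be put into a rough back-of-the-envelope model. The sketch below is illustrative only and is not from Flann’s talk: it assumes every person who sees a meme passes it to a fixed number of new people after a fixed delay, which real social networks do not do. The function name and all numbers are hypothetical.

```python
def people_reached(branching_factor: int, hours_per_share: float, hours_elapsed: float) -> int:
    """Total people reached (including the original poster) under idealized growth.

    Assumption: each newly reached person shares with `branching_factor`
    new people exactly `hours_per_share` hours later.
    """
    generations = int(hours_elapsed // hours_per_share)
    # Geometric series: 1 + b + b^2 + ... + b^generations
    return sum(branching_factor ** g for g in range(generations + 1))


# Hypothetical numbers chosen only to show the effect of each lever over one day.
slow = people_reached(branching_factor=2, hours_per_share=6, hours_elapsed=24)
fast = people_reached(branching_factor=2, hours_per_share=2, hours_elapsed=24)
wide = people_reached(branching_factor=4, hours_per_share=6, hours_elapsed=24)
print(slow, fast, wide)
```

In this toy model, shortening the delay between shares or raising the number of people each share reaches both increase the total, which is the compounding effect Flann describes.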

The internet changed how we consume media and, by extension, ideas. The rise of AI technology has further altered which ideas reach us. Deepfakes (realistic but fabricated images, videos, or audio files) are often produced with generative adversarial networks, in which two neural networks compete: one generates fakes while the other tries to tell them from the real thing, until the fakes become convincing.

“Soon we will be able to make deepfakes that can make people do anything,” Flann warned during his Science Unwrapped talk, adding that these could be videos depicting people as terrorists, showing them doing drugs or naked, or mapping them into Nazi demonstrations. “All those things are possible.”

Flann argues that initiatives to develop AI technology that can detect deepfakes are futile. Using the same technology to subvert deepfakes will lead to better deepfakes and an internet arms race, one in which destructive technology takes mere days, not years, to develop.

Perhaps more worrying is the idea that dangerous memes don’t have to be state-sponsored acts of aggression, amplified by bots and unleashed on an unsuspecting population, to be harmful. Sometimes, the most dangerous information is the media we don’t consume. During his talk, Flann pointed to one very simple and powerful meme: the label “fake news.”

“It can kill off hard won information and eliminate its consideration from so many people’s minds that they just dismiss it,” he said.

How do we architect our way out of this mess of our own creation? Some policymakers have called for regulating social media. Education about internet literacy has limitations. Flann suggests we dissect the problem as though it were a virus like COVID-19. Both biological viruses and disinformation memes operate on the same principle of taking advantage of vulnerabilities in their human hosts. We fight viruses like polio and COVID-19 with vaccines. Why not try to protect people with immunity against dangerous memes?

“If we are going to protect against this, we have to tap into the same mechanisms and we have to acknowledge these same vulnerabilities in ourselves,” Flann says.

However, that may prove to be a heavy lift.

When Dawkins first introduced the idea of cultural memes, it was largely ignored and even considered offensive by some people with strong convictions, Flann says. The idea that a belief one holds could stem from a cultural meme is disturbing.

“It’s a cherished notion that everything we do and think and say is from us and we own that,” Flann says, adding that people don’t want to think of themselves as “a host to an idea.”

He suggests we need to be open to the idea of memes if we are to combat the threat some carry.

“The vulnerabilities that were built into us through evolution were actually benefits back in the old days when we could be open to ideas and be influenced by others,” Flann says. “That was good because that is how we worked out language, and learning, and transfer of skills … helpful when we were just developing original human societies. But now we have just built those tools that exploit all of those vulnerabilities. Because people can be manipulated.”

Perhaps, Flann wonders, we can inoculate people against dangerous memes promoting harmful conspiracy theories through early exposure and training. It’s a concept borrowed from some deradicalization programs developed to fight violent terrorism. Think of it as a vaccine that generates antibodies to fight an invader’s strength and ability to influence us, he says. “That same mode of operation with memes makes a lot of sense to me. And I think that could be a very powerful approach.”

Flann believes that dangerous memes are no longer something that can be shrugged off and that without some type of intervention, humankind may be on a slow march toward an Orwellian future considering the invisible internet strings pulling on our society.

“Our free will could be taken from us without any need for force or torture, it could be taken from us through the process of these memes that are taking over our mind planting ideas and taking over our behavior,” he said during his presentation last fall. “The remarkable thing about it is, it is so subtle. In fact, we wouldn’t even know it was happening to us and yet it could happen.”

“I think it’s worthwhile to think about our convictions,” he said. “Where do our convictions come from? And our convictions drive our character; they drive our behavior. How do we decide how to believe them?”

Detecting conspiracy theories

The stories we repeat can help tell us who we are as a society and how we are feeling. One person who has spent decades thinking about the stories we share is Jeannie Thomas, professor of English and co-founder of USU’s Digital Folklore Project. She likens folklorists to a type of detectorist who can easily spot legends, rumors, and conspiracy theories floating in everyday conversations and lurking in posts online because they are trained to recognize narrative patterns and motifs.

In other words, folklorists have a pretty good “BS detector” or “legend detector,” Thomas explained in an interview over Zoom in February.

That ability to recognize a story for what it is could prove invaluable for public health and the health of democracies worldwide. In the early days of the COVID-19 pandemic, when little was known about how the virus spread or who was most at risk, and countries were establishing lockdowns, Thomas understood the climate was ripe for rumors, legends, and conspiracy theories to flourish. She wondered how she could impart her decades of folklore training to the masses. “Instead of giving people the fish, how can I teach people to fish?”

She wanted to equip people with the skills to sift through the stories delivered to their digital doorsteps and determine whether what they reeled in is something they should toss or take home.

Because “lies travel faster on the web than truth does,” she says. “Lies are much easier to monetize, too.”

The idea often attributed to Mark Twain that “a lie is halfway around the world while truth is still putting on its boots” seems to square with misinformation today. A 2018 study by MIT researchers found that falsehoods spread about six times faster than the truth on Twitter.

A version of the old boots adage goes back to at least Jonathan Swift, Thomas says, “which tells me lies have always been problematic because they are sexy and they are shocking and they get our attention. They are sticky. We remember them because of that. But the difference today is those boots have jet propulsion and can go so much farther and wider.”

The other problem, she notes, is many of these disinformation efforts have an “implied imperative in them—do something! Do something!”

Provide your social security number. Hoard toilet paper. Storm the U.S. Capitol.

But the problem is bigger than Twitter. All social media sites allow some level of misinformation to exist in their ecosystem, Thomas explained in a presentation Jan. 29 for BYU’s William A. Wilson Folklore Archives 2021 Founder’s Lecture. YouTube and Facebook are known for allowing unverified content to proliferate on their platforms, Pinterest and Instagram less so. But disinformation is there, too, along with the incentive to perpetuate it because it gathers more engagement from users.

This means people wade through a lot of misinformation on the internet every day, Thomas told the virtual audience. “Knowing more about legends, rumors, and conspiracy theories can help us.”

She developed the SLAP test, “a gut check with some metrics,” for the everyday person to serve as their lodestar for truth. The acronym stands for Scare, Logistics, A-list, and Prejudice, and the technique encourages people to develop caution when approaching new information. Consider it step one of testing the veracity of a narrative. Thomas unveiled the SLAP test during the talk. Here’s how it works:

When encountering a story from a friend or anyone else, online or otherwise, ask yourself:

Does a piece of “news” elicit a strong reaction, shocking or scaring you? Be wary.

Does an account rely on a farfetched set of logistics to be true? Be wary.

Does the narrative involve A-List celebrities or high-profile companies or events? For instance, is Bill Gates involved? Be wary.

Does the account play into your own confirmation biases or prejudices? Does it demonize some person or group as a bad actor? Be wary.

Then consult a real fact-checking site, such as Leadstories.com or factcheck.org, not the comments of random people on a chat board.

“If you answer yes to one of these questions, don’t trust the story. At the very minimum, explore it,” Thomas says. “Learn to be suspicious. I think that is helpful because we are also in this day and age where the scam artists, the phishing emails, have gotten so sophisticated I cannot even believe it. … And those push you to act, too.”
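For readers who think in code, the checklist can be written down as a tiny decision rule. This is only a sketch of the four questions as described above, not a tool Thomas published: the class and function names are hypothetical, and the answers still have to come from a person’s own judgment.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SlapAnswers:
    """Yes/no answers a reader gives to the four SLAP questions."""
    scare: bool      # Does it provoke a strong shock or scare?
    logistics: bool  # Does it rely on far-fetched logistics to be true?
    a_list: bool     # Does it involve celebrities or high-profile companies or events?
    prejudice: bool  # Does it play to your biases or demonize a person or group?


def slap_flags(answers: SlapAnswers) -> List[str]:
    """Return the names of the SLAP questions that were answered yes."""
    labels = {
        "Scare": answers.scare,
        "Logistics": answers.logistics,
        "A-list": answers.a_list,
        "Prejudice": answers.prejudice,
    }
    return [name for name, yes in labels.items() if yes]


def should_verify(answers: SlapAnswers) -> bool:
    """One yes is enough to pause and consult a fact-checking site."""
    return len(slap_flags(answers)) > 0


# Hypothetical example: an alarming story that names a famous person.
rumor = SlapAnswers(scare=True, logistics=False, a_list=True, prejudice=False)
print(slap_flags(rumor), should_verify(rumor))
```

The rule is deliberately strict: a single yes triggers a check, matching Thomas’s advice to explore any story that trips even one question.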

The idea is to “slap some sense into yourself,” she says. “Nobody wants to be gullible. I really tried to make it clear and not overly complicated. Scholars, we like to get into the weeds, I was deliberately making it applied … because we need that right now.”

The SLAP test allows people to slow the lie down by stretching the processing time to consider the veracity of the story before passing it on.

“When you get on the internet, know that there are people out there who want to hurt you, who want to take advantage of you, who want to steal your money,” Thomas says. “It’s not all happy cat memes. Those people are there, so steer yourself accordingly, avoid the robbers lurking in the internet woods.”

But even folklorists can be fooled.

In her talk, Thomas detailed how last March, at the beginning of the COVID-19 pandemic in the United States, she passed on an unverified account of martial law being invoked during the lockdown to her sister. It came in the form of a text message from a friend of her son’s whose father is a first responder.

“In our culture, we’ve made it hard to admit when we are wrong,” Thomas says. “I deliberately put in a story where I screwed it up totally because I wanted people to see that it’s okay and I learned a lot.”

We are human. It happens. But to be better the next time, you have to learn something first.

“Let it teach you,” she says. “That is the goal. … Where you learn is when you make those screw-ups. We just have to be okay with that teaching us something.”

The information we share matters

Perhaps one part of the problem is that the medium through which internet memes are delivered makes them easy to dismiss. Instagram is just nice pictures with cool filters. Facebook posts and tweets are ephemeral. You just scroll and move on. With the internet, it’s never been easier to find good information or to be inundated with bad.

“And how do you know the difference?” Thomas asks.

Over the last few years, Thomas watched the unsubstantiated conspiracy theory QAnon take hold of thousands of everyday Americans. The theory, spread by an anonymous poster claiming to be a government insider, pivots around the idea that President Donald Trump is secretly working to bring down a global cabal of child traffickers whose alleged members range from celebrities like Tom Hanks to businessmen including George Soros. For the legends scholar, the rise of QAnon wasn’t surprising.

“It’s not a new thing to me,” Thomas says. “Welcome back ’80s and early ’90s—and even the early 20th century. It’s satanism and antisemitism all over again. Same narrative elements, slightly different bad guys, the story lines remain the same but the names change.”

Are we in a really bad remake?

“We are. It’s Groundhog Day,” she says.

“One of the things that fascinates me as a scholar is one of their lines is ‘do the research’,” Thomas says. “They think they are. Scrolling through someone else’s posts on your chat board trying to decipher Q’s messages isn’t really research. People don’t know what good research is.”

And political rhetoric has made it harder for people to fact check themselves and their sources.

“All of a sudden you are no longer loyal to your team if you fact check,” Thomas says. “That is just hurting you.”

When COVID-19 emerged, she understood the population was vulnerable to misinformation about the virus that could accelerate anxiety about the pandemic. It fit a pattern she had seen many times before, with witchcraft and legend outbreaks erupting during times of political, economic, and cultural instability and during epidemics such as smallpox.

People eventually look for scapegoats to blame, she says. “I knew the legends were just going to spring up like wildfire and that things were going to get more destabilized because the culture is under stress.”

In the early months of COVID-19, many people were home, stressed, and on the internet looking for answers.

“And at the same time people don’t have enough information about a life-threatening circumstance,” Thomas says. “Those are just the perfect circumstances. We are going to narrate that, maybe not directly, but metaphorically, through those legends, rumors and conspiracy theories.”

And these stories can spread doubt about life-saving vaccines. They can disrupt effective public health responses. The information we share matters.

Thomas points to 2005, when President George W. Bush launched a robust pandemic response plan, including stockpiling ventilators, after reading an advance copy of a book about the 1918 flu pandemic.

“That story mattered,” she says. “We need to be careful about the stories that we tell if we are going to act on them. We better make sure that they are grounded in something that is true.”

Thomas devised the SLAP test as a workaround for the evidence that tackling falsehoods with facts doesn’t seem to sway minds and may only entrench them further. The SLAP test gives people a path to uncover the truth for themselves.

After her presentation, one virtual attendee reveled in the idea of SLAP “fomenting infectious humility.”

We could all likely use a dose of it.
